October 2020, Vol. 247, No. 10

Features

Can Implementing AI Make a Smart Pig Smarter?

By Rob Roberts, Director, and Steve Roberts, Director, Opportune LLP

Remember the end of Raiders of the Lost Ark? The titular ark was placed in a huge government warehouse with millions of other items – crates and shelves as far as the eye could see.

That’s roughly how much data pipeline companies hold from every cathodic protection inspection, every drive-by visual inspection and every maintenance activity on their pipeline segments.

For pipeline operators who took advantage of cheap digital storage to save historical data from their supervisory control and data acquisition (SCADA) systems, smart pipeline inspection gauges (pigs), smart balls and pipeline maintenance, a windfall may be looming.

It’s no secret that more of the oil and gas industry is leveraging technology, but for the pipeline industry, this treasure trove of data could be a boon at just the right time. The downturn has pushed companies to use any means necessary to improve outcomes and reduce costs.

Harnessing AI

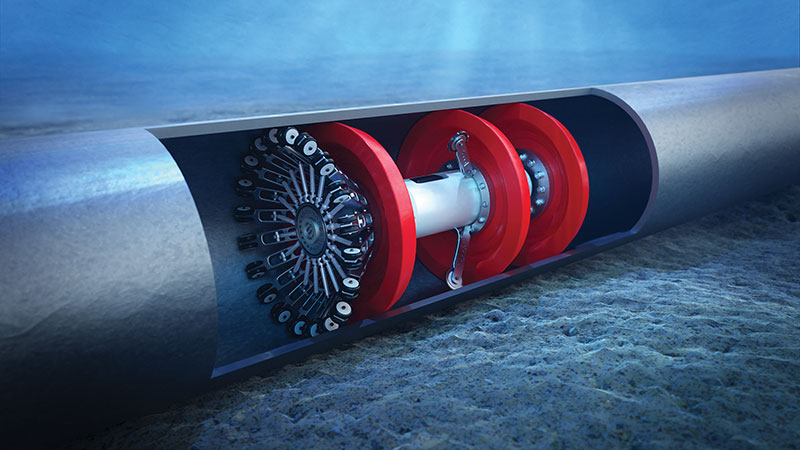

Many companies have begun experimenting with tools that allow them to get more done with less, with a special focus on artificial intelligence (AI) and related technologies. Historical data are the foundation for applying AI in the industry, and it’s hard to find an industry segment that generates more data on the integrity of its infrastructure than the pipeline industry. Can implementing AI make a smart pig smarter?

Preventive and predictive maintenance are the lifeblood of the pipeline industry. Any unscheduled downtime is costly from both an operational and a reputational standpoint. Capturing detailed data from the various inspections over time can improve the predictability of maintenance requirements to the point that unscheduled downtime is nearly eliminated.

Improvements in technology, and its wider availability, have been an important leap forward for the industry. From the acoustic analysis performed by smart balls to the detailed inspections possible with smart pigs, never before have such detailed data been available for every square foot of pipe surface and supporting systems. In the past, someone might have taken all those data and summarized the maintenance requirements for a pipeline segment. No more.

With the advent of cheap storage, extensive cloud-based computing power and tools that democratize AI, moving it from the lab to the desktop, all those historical data now have a new purpose. Individually, each data element serves its own end; combined, they can reveal far more. For example, physical observations of the pipeline exterior also capture the terrain along the right-of-way (ROW).

These “eyes on site” have generally come from driving the pipeline ROW in a pickup or an ATV, but the increased use of satellite imagery and drones has led to more consistent categorization of the observed locations.

By combining AI visual pattern matching with exact GPS locations, operators can detect terrain shifts across months of observations more easily (and automatically). Catching those shifts early can help prevent a sudden pipeline shift or breach caused by a landslide or small tremors.
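The article relies on AI image pattern matching for this; as a much simpler stand-in, the sketch below assumes the imagery or survey work has already been reduced to repeated elevation readings at fixed GPS markers along the ROW, and flags markers whose least-squares trend suggests ground movement. The `MarkerSurvey` record, the marker IDs and the 0.01 m/month threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MarkerSurvey:
    """One repeated observation of a (hypothetical) GPS survey marker on the ROW."""
    marker_id: str
    month: float        # months since the first survey
    elevation_m: float  # surveyed elevation of the marker, in meters


def displacement_rate(surveys: list[MarkerSurvey]) -> float:
    """Ordinary least-squares slope of elevation vs. time, in meters per month."""
    n = len(surveys)
    mean_t = sum(s.month for s in surveys) / n
    mean_z = sum(s.elevation_m for s in surveys) / n
    sxx = sum((s.month - mean_t) ** 2 for s in surveys)
    if sxx == 0:
        raise ValueError("surveys must span more than one point in time")
    sxz = sum((s.month - mean_t) * (s.elevation_m - mean_z) for s in surveys)
    return sxz / sxx


def flag_moving_markers(surveys: list[MarkerSurvey],
                        threshold_m_per_month: float = 0.01) -> dict[str, float]:
    """Return {marker_id: rate} for markers whose trend exceeds the threshold."""
    by_marker: dict[str, list[MarkerSurvey]] = {}
    for s in surveys:
        by_marker.setdefault(s.marker_id, []).append(s)
    flagged = {}
    for marker_id, obs in by_marker.items():
        if len(obs) < 2:
            continue  # a single survey cannot show a trend
        rate = displacement_rate(obs)
        if abs(rate) > threshold_m_per_month:
            flagged[marker_id] = rate
    return flagged


# Hypothetical usage: one stable marker and one slowly subsiding marker.
surveys = [MarkerSurvey("MP-101", m, 250.0) for m in range(6)]
surveys += [MarkerSurvey("MP-102", m, 312.0 - 0.03 * m) for m in range(6)]
print(flag_moving_markers(surveys))  # only MP-102 is flagged
```

A straight-line trend is the simplest possible detector; a real system would also need to account for survey noise and seasonal ground movement before raising an alert.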

Such AI is possible because of the vast amounts of data available both inside and outside pipeline companies. Imagery from the U.S. Geological Survey (USGS) and others photographing the same areas, coupled with the historical and current geographical and geological information held by the pipeline companies themselves, allows an AI engine to infer impending geological changes and when they might occur.

Of course, such predictions need not rely on images alone. With today’s cloud computing power and vast storage, AI engines can pair the imagery with regional USGS seismic activity data to make more accurate predictions of shifts that could affect any of the pipeline assets.
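Pairing seismic data with pipeline assets starts with a spatial join: which recorded events fall near a given asset? The sketch below assumes the seismic catalog has already been downloaded into simple records (it does not call any USGS service) and uses the haversine great-circle distance; the `Quake` record, the 50 km radius and the 2.5 magnitude cutoff are hypothetical choices.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


@dataclass
class Quake:
    """A seismic event already extracted from a catalog (hypothetical record)."""
    lat: float
    lon: float
    magnitude: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two latitude/longitude points, in km."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def nearby_quakes(asset_lat: float, asset_lon: float, quakes: list[Quake],
                  radius_km: float = 50.0, min_magnitude: float = 2.5) -> list[Quake]:
    """Events at or above min_magnitude within radius_km of the asset."""
    return [q for q in quakes
            if q.magnitude >= min_magnitude
            and haversine_km(asset_lat, asset_lon, q.lat, q.lon) <= radius_km]


# Hypothetical usage: a compressor station and three catalog events.
catalog = [Quake(35.1, -97.1, 3.0), Quake(35.0, -97.0, 1.8), Quake(37.0, -97.0, 4.0)]
print(nearby_quakes(35.0, -97.0, catalog))  # only the magnitude-3.0 event qualifies
```

Counts like these could then become one input feature, alongside imagery-derived movement, for whatever predictive model an operator chooses.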

Making Data Make Sense

Combining the data held internally by the pipeline company on the performance of its compressor station components with data from the manufacturer adds a level of insight only available when data are shared among key stakeholders.

With the computing power of the cloud and the data exposed to all interested parties, a report of a premature head gasket failure on a particular manufacturer’s compressor engine can be cross-referenced against every installation of that gasket worldwide within minutes. The value of such data sharing for predictive maintenance is hard to overstate.
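The cross-referencing step can be as simple as an index keyed by manufacturer and part number, built once over a shared installation registry so that each new failure report is a constant-time lookup. This is a minimal sketch assuming such a registry exists; the `Installation` fields, the "AcmeCo" manufacturer and the part numbers are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Installation:
    """Where a specific part is installed (hypothetical shared-registry record)."""
    site: str
    engine_model: str
    manufacturer: str
    part_number: str


def build_part_index(registry: list[Installation]) -> dict[tuple[str, str], list[Installation]]:
    """Index installations by (manufacturer, part_number) for constant-time lookup."""
    index: dict[tuple[str, str], list[Installation]] = defaultdict(list)
    for inst in registry:
        index[(inst.manufacturer, inst.part_number)].append(inst)
    return index


def installations_at_risk(index, manufacturer: str, part_number: str) -> list[str]:
    """Sites that carry the same part as a newly reported failure."""
    # .get() avoids inserting empty entries into the defaultdict
    return sorted(inst.site for inst in index.get((manufacturer, part_number), []))


# Hypothetical usage: a premature gasket failure is reported for part "HG-200".
registry = [
    Installation("Station 4", "V16", "AcmeCo", "HG-200"),
    Installation("Station 9", "V12", "AcmeCo", "HG-150"),
    Installation("Station 2", "V16", "AcmeCo", "HG-200"),
]
index = build_part_index(registry)
print(installations_at_risk(index, "AcmeCo", "HG-200"))  # ['Station 2', 'Station 4']
```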

Historical data on compressor engines, pumping components and even flow improvers injected into the pipeline can now be analyzed with AI tools to anticipate issues before they arise. Maintenance reports that capture all parts and consumables (not just generic part information, but specifics such as the manufacturer and fabrication materials) can yield effective predictive measures for pipeline operations.
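One reason part specifics matter is that they let failure rates be compared across manufacturers and materials. The sketch below computes a crude rate (failures per part-year of service) for each (manufacturer, material) group; it assumes a roughly constant failure rate within a group, and the `PartRecord` fields and sample values are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class PartRecord:
    """One part's history, as captured in (hypothetical) detailed maintenance reports."""
    manufacturer: str
    material: str
    service_years: float  # time the part spent in service
    failed: bool          # True if it was removed because it failed


def failure_rate_by_spec(records: list[PartRecord]) -> dict[tuple[str, str], float]:
    """Crude failure rate (failures per part-year) grouped by (manufacturer, material).

    Assumes a roughly constant failure rate within each group; parts still in
    service contribute their exposure time but no failure.
    """
    exposure: dict[tuple[str, str], float] = defaultdict(float)
    failures: dict[tuple[str, str], int] = defaultdict(int)
    for r in records:
        key = (r.manufacturer, r.material)
        exposure[key] += r.service_years
        failures[key] += int(r.failed)
    return {k: failures[k] / exposure[k] for k in exposure if exposure[k] > 0}


# Hypothetical records for two gasket suppliers.
records = [
    PartRecord("A", "nitrile", 2.0, True),
    PartRecord("A", "nitrile", 3.0, False),
    PartRecord("B", "fluoroelastomer", 5.0, False),
    PartRecord("B", "fluoroelastomer", 5.0, True),
]
print(failure_rate_by_spec(records))
```

With only generic part descriptions, both groups would collapse into one rate and the difference between them would be invisible.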

Maintenance data for remote locations are often rudimentary in the detail captured. That doesn’t make those data any less valuable. The cloud has the computing power to iterate quickly and find the right AI model to turn basic work order data into a predictive total-cost-of-operation model.
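Even bare-bones work orders (an asset ID, a completion date and whether the order was a failure repair) are enough to produce times between failures, the usual raw input to reliability models. This is a minimal sketch assuming that hypothetical three-field export and assuming each repair returns the asset to an as-good-as-new state; it ignores the still-running interval after the last failure.

```python
from collections import defaultdict
from datetime import date


def times_between_failures(work_orders):
    """Days between successive failure work orders, per asset.

    work_orders: iterable of (asset_id, completed_on, is_failure) tuples, a
    hypothetical minimal export of a maintenance system.
    """
    failure_dates = defaultdict(list)
    for asset_id, completed_on, is_failure in work_orders:
        if is_failure:  # routine/preventive orders are not failures
            failure_dates[asset_id].append(completed_on)
    intervals = {}
    for asset_id, dates in failure_dates.items():
        dates.sort()
        intervals[asset_id] = [(later - earlier).days
                               for earlier, later in zip(dates, dates[1:])]
    return intervals


orders = [
    ("P-1", date(2019, 1, 1), True),
    ("P-1", date(2019, 2, 15), False),   # routine PM, ignored
    ("P-1", date(2019, 10, 1), True),
    ("P-1", date(2019, 4, 1), True),
]
print(times_between_failures(orders))  # {'P-1': [90, 183]}
```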

Take, for example, a Weibull analysis. Named for Swedish engineer Waloddi Weibull, who described the underlying distribution in detail in the mid-20th century, it models the time to failure of equipment to determine what’s reliable and what isn’t.
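For reference, the two-parameter Weibull model commonly used in reliability work expresses the probability $F(t)$ that an item has failed by age $t$ in terms of a shape parameter $\beta$ and a scale (characteristic life) parameter $\eta$. $R(t)$ is the reliability, $f(t)$ the probability density and $h(t)$ the hazard, or instantaneous failure rate. The notation is the conventional one, not taken from the article.

```latex
F(t) = 1 - \exp\!\left[-\left(\frac{t}{\eta}\right)^{\beta}\right],
\qquad
R(t) = 1 - F(t),
\qquad
h(t) = \frac{f(t)}{R(t)} = \frac{\beta}{\eta}\left(\frac{t}{\eta}\right)^{\beta - 1}
```

With $\beta < 1$ the hazard falls over time (early-life failures), $\beta = 1$ gives a constant hazard (random failures, the exponential distribution) and $\beta > 1$ gives a rising hazard (wear-out).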

With a relatively small amount of basic work order data, Weibull analysis has proven effective at indicating the likelihood and timing of failure and the failure mode (i.e., early-life failure, random failure or old-age wear-out). Scaling such an analysis, even from a small historical data set, and combining it with maintenance and operational costs can yield optimal maintenance curves superior to those produced by standard historical maintenance analysis.
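A minimal sketch of both steps, under stated assumptions: the Weibull shape β and scale η are estimated by median rank regression (one common fitting method among several; maximum likelihood is another), β is read as a failure mode, and a classic age-replacement cost model is then searched for the planned replacement age that minimizes long-run cost per unit time. All failure times and cost figures are hypothetical, and the model ignores suspended (still-running) units.

```python
import math


def weibull_fit_mrr(failure_times: list[float]) -> tuple[float, float]:
    """Estimate Weibull shape (beta) and scale (eta) by median rank regression.

    Uses Benard's approximation F_i = (i - 0.3) / (n + 0.4) for the i-th
    ordered failure, then fits ln(-ln(1 - F)) = beta*ln(t) - beta*ln(eta)
    by ordinary least squares.
    """
    t = sorted(failure_times)
    n = len(t)
    if n < 2:
        raise ValueError("need at least two failure times")
    xs = [math.log(ti) for ti in t]
    ys = [math.log(-math.log(1.0 - (i - 0.3) / (n + 0.4))) for i in range(1, n + 1)]
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    beta = sxy / sxx
    eta = math.exp(mx - my / beta)  # intercept = -beta * ln(eta)
    return beta, eta


def failure_mode(beta: float, tol: float = 0.1) -> str:
    """Rough reading of the shape parameter (bathtub-curve region)."""
    if beta < 1.0 - tol:
        return "early-life (infant mortality)"
    if beta > 1.0 + tol:
        return "wear-out"
    return "random"


def cost_rate(age: float, beta: float, eta: float,
              cost_planned: float, cost_failure: float, steps: int = 2000) -> float:
    """Long-run cost per unit time if a part is replaced at `age` or at failure.

    Age-replacement model: C(T) = [Cp*R(T) + Cf*(1 - R(T))] / integral_0^T R(t) dt,
    with the integral (expected cycle length) done by the trapezoidal rule.
    """
    def reliability(x: float) -> float:
        return math.exp(-((x / eta) ** beta))

    h = age / steps
    cycle = h * (0.5 * reliability(0.0)
                 + sum(reliability(k * h) for k in range(1, steps))
                 + 0.5 * reliability(age))
    r_t = reliability(age)
    return (cost_planned * r_t + cost_failure * (1.0 - r_t)) / cycle


def best_replacement_age(beta, eta, cost_planned, cost_failure, grid=200):
    """Grid-search the planned replacement age over (0, 3*eta]."""
    ages = [3.0 * eta * k / grid for k in range(1, grid + 1)]
    return min(ages, key=lambda a: cost_rate(a, beta, eta, cost_planned, cost_failure))


# Hypothetical usage: days between pump failures from work orders, and a
# planned replacement assumed to cost one-tenth of an unplanned failure.
beta, eta = weibull_fit_mrr([410, 620, 780, 905, 1040, 1190, 1370, 1650])
print(failure_mode(beta), round(beta, 2), round(eta))
print(best_replacement_age(beta, eta, cost_planned=1.0, cost_failure=10.0))
```

Evaluating `cost_rate` across the grid traces the "maintenance curve"; its minimum is the suggested replacement age. For β at or below 1 the search simply runs to the edge of the grid, reflecting that planned replacement doesn't pay off for random or early-life failures.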

Sometimes the value of AI lies simply in identifying outliers. Consider an analysis typically performed by a person whose experience allows them to scan reams of data and find the anomalies that need further investigation.

Traditionally, a newcomer learns by apprenticing with the experienced analyst to see how to read the data. With machine learning and AI, a computer can “watch and learn” to spot the anomalies and flag the data for further investigation by the experienced analyst. This use of advanced technology is typically easier for users to accept – they see the “machine” as helping them do their job better instead of as a threat to their job.
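The "watch and learn" approach the article describes implies a model trained on an analyst's past judgments. As a simpler first screen that needs no labels, the sketch below flags readings by the modified z-score of Iglewicz and Hoaglin, which uses the median and the median absolute deviation (MAD) so a few extreme values cannot mask themselves. This is a swapped-in statistical technique, not machine learning; the pressure readings and the 3.5 cutoff (the conventional choice for this score) are illustrative.

```python
import statistics


def flag_anomalies(readings: list[float], threshold: float = 3.5) -> list[int]:
    """Indices of readings whose modified z-score exceeds `threshold`.

    Modified z-score: M_i = 0.6745 * (x_i - median) / MAD, where MAD is the
    median absolute deviation from the median.
    """
    med = statistics.median(readings)
    mad = statistics.median(abs(x - med) for x in readings)
    if mad == 0:
        # At least half the readings equal the median; treat any departure as unusual.
        return [i for i, x in enumerate(readings) if x != med]
    return [i for i, x in enumerate(readings)
            if abs(0.6745 * (x - med) / mad) > threshold]


# Hypothetical compressor discharge pressures (psi); index 5 looks suspicious.
pressures = [812, 815, 810, 813, 811, 900, 814, 812]
print(flag_anomalies(pressures))  # [5]
```

The flagged indices would go to the experienced analyst for review, which matches the article's point that the tool assists rather than replaces the analyst.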

Most importantly, any push forward into machine learning and AI must start with the right questions. What are you trying to answer and what’s the business value? While the trendy mindset in technology delivery today is Agile development and being comfortable with “failing fast,” you still need to ensure you’re failing fast in the right direction.

Are you trying to reduce maintenance costs? Perhaps geological data and satellite images could reduce in-person inspection costs. Or are you trying to extend the life of key pumping/compression assets through smart monitoring and preventive maintenance?

Once you have identified the right question, you must determine the right data to analyze. It may not even be data about that pipeline, but about another pipeline with similar properties, location and other traits. Ideally, your maintenance activities are in place to prevent failure – of a compressor, a tank, a valve or a pipeline segment wall. Using all the data at your disposal allows AI tools to learn which conditions tend to precede a breach and tell you whether and where those conditions are present.
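Finding "another pipeline with similar properties" is a similarity search. The article doesn't name a method; the sketch below uses k-nearest neighbors on standardized features, one common approach, so that features in different units contribute comparably. The feature set, segment IDs and values are hypothetical.

```python
import math


def nearest_analogs(target: list[float], candidates: dict[str, list[float]],
                    k: int = 2) -> list[str]:
    """Names of the k candidate segments closest to `target` in standardized feature space.

    Each feature column is scaled to zero mean and unit (population) standard
    deviation over the candidates so that, e.g., diameter in inches and age in
    years contribute comparably to the Euclidean distance.
    """
    names = list(candidates)
    columns = list(zip(*(candidates[n] for n in names)))
    means = [sum(col) / len(col) for col in columns]
    # Fall back to 1.0 for a constant column to avoid dividing by zero.
    sds = [math.sqrt(sum((v - m) ** 2 for v in col) / len(col)) or 1.0
           for col, m in zip(columns, means)]

    def scale(row: list[float]) -> list[float]:
        return [(v - m) / s for v, m, s in zip(row, means, sds)]

    t = scale(target)
    distance = {n: math.dist(t, scale(candidates[n])) for n in names}
    return sorted(names, key=distance.get)[:k]


# Hypothetical features: [diameter_in, wall_thickness_in, age_years, latitude]
segments = {
    "SEG-A": [24, 0.375, 40, 31.0],
    "SEG-B": [24, 0.375, 38, 31.2],
    "SEG-C": [36, 0.500, 5, 47.0],
    "SEG-D": [12, 0.250, 60, 29.5],
}
print(nearest_analogs([24, 0.375, 39, 31.1], segments))  # SEG-A and SEG-B, in either order
```

Inspection and failure histories from the returned analog segments could then supplement sparse data for the segment of interest.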

With legal challenges mounting against pipelines across the continent, including the recent shutdown orders for the Dakota Access Pipeline, preventing downtime and environmental disasters may warrant sharing maintenance and inspection data across company lines. By pooling data across the industry and multiplying the size of the inspection and maintenance dataset many times over, we may identify ways to virtually eliminate emergency maintenance. This type of data sharing is unprecedented today, but it may be the smart move going forward.

Authors: Rob Roberts is a director in Opportune LLP’s Process & Technology practice. He has more than 20 years of experience in the energy industry (upstream, downstream, oilfield services), focused on the delivery of mid-to-large-scale ERP implementations involving process optimization, system integration and application automation. Roberts holds a BBA in management information systems from Texas A&M University and an MBA in international business from Our Lady of the Lake University.

Steve Roberts is a director in Opportune LLP’s Process & Technology practice. He has more than 20 years of experience consulting in the energy industry providing clients with trading and risk management process and system implementation, supply chain optimization, asset acquisition integration and business analytics. Roberts holds a bachelor’s degree in chemical engineering from Texas A&M University.
